What is Function Calling?

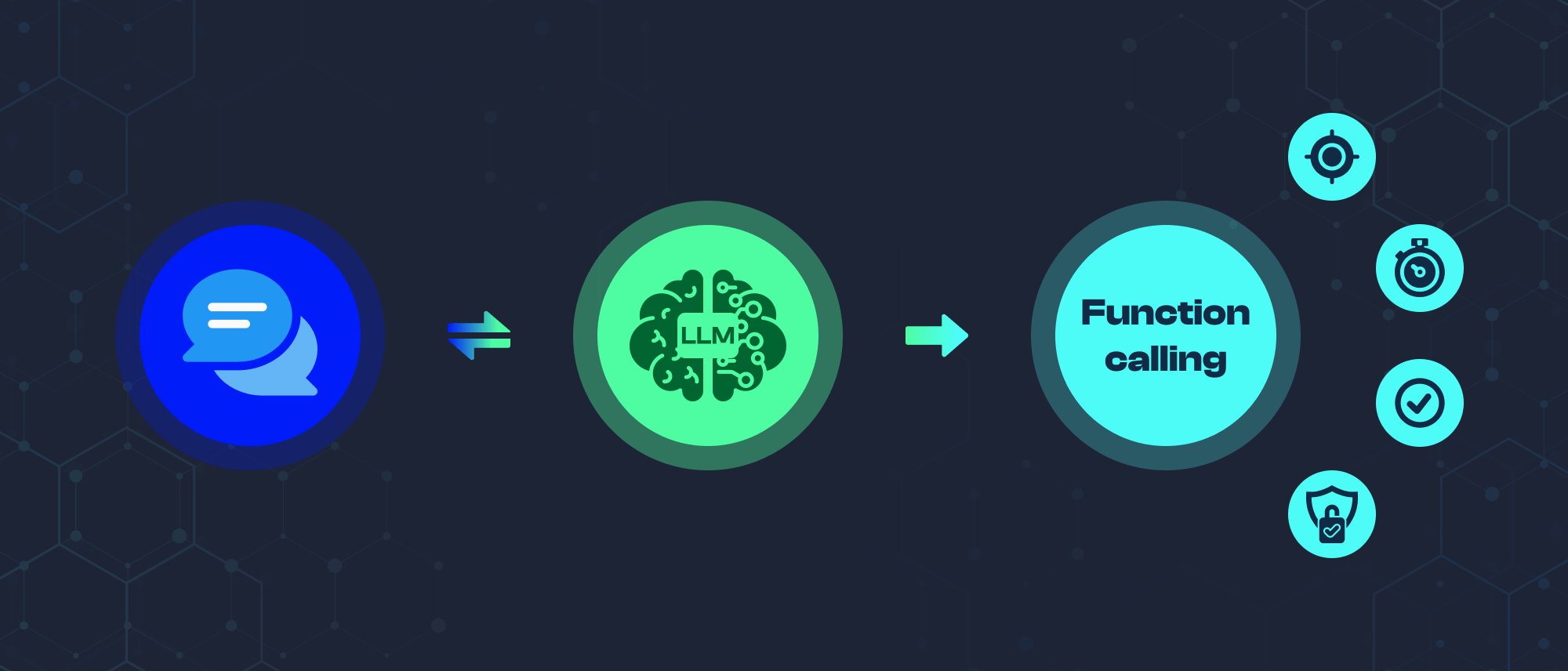

Function Calling is a powerful feature in large language models (LLMs) that enables them to interact with external tools, APIs, and databases. This capability allows LLMs to extend their problem-solving abilities beyond their training data, accessing real-time information and performing complex operations.

At its core, function calling in AI is the ability of an LLM to recognize when a specific external function or tool is needed to complete a task and to formulate appropriate inputs for that function. This bridges the gap between natural language understanding and practical, real-world actions.
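As an illustration, the sketch below shows both halves of that exchange: a function definition that the application gives the model, and the structured call the model might send back. The `get_current_weather` function and its fields are hypothetical. Many providers describe parameters with JSON Schema, but the surrounding message format varies between providers, so this does not reproduce any specific vendor's API.

```python
import json

# A hypothetical tool definition. The "parameters" object is JSON Schema,
# which many LLM providers use to describe function arguments; the outer
# wrapper around it differs from provider to provider.
GET_WEATHER_TOOL = {
    "name": "get_current_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name, e.g. Paris"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}

# What the model might return once it decides the tool is needed: the
# function name plus arguments encoded as a JSON string. The model only
# describes the call; the application is responsible for running it.
model_tool_call = {
    "name": "get_current_weather",
    "arguments": '{"city": "Paris", "unit": "celsius"}',
}

args = json.loads(model_tool_call["arguments"])
print(model_tool_call["name"], args)
# prints: get_current_weather {'city': 'Paris', 'unit': 'celsius'}
```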

Key Aspects of Function Calling

1. Identification: The LLM must accurately identify situations where an external function call is required to fulfil a user's request.

2. Selection: From a set of available functions, the model needs to choose the most appropriate one for the task at hand.

3. Parameter Population: Once a function is selected, the LLM must generate suitable parameters based on the context and user input.

4. Execution: The application hosting the model calls the chosen function with the provided parameters, typically through an API or other interface.

5. Integration: The LLM incorporates the function's output into its response, presenting it coherently to the user.

6. Error Handling: The model should be able to handle and communicate any errors or unexpected results returned by the function.

7. Context Preservation: Throughout the function calling process, the LLM must maintain the conversation's context and user intent.
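Parameter population and error handling can be made concrete with a check that runs before any function is executed. The sketch below assumes JSON Schema-style definitions but handles only a small subset (required fields, basic types, enums); `validate_arguments` is a hypothetical helper, and a production system would more likely use a full JSON Schema validator. Returning a list of problems instead of raising lets the application send the errors back to the model, which can then correct the call or explain the issue to the user.

```python
from typing import Any

# JSON Schema type names mapped to Python types (a deliberately small subset).
_TYPES: dict[str, Any] = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
}


def validate_arguments(schema: dict[str, Any], args: dict[str, Any]) -> list[str]:
    """Return a list of problems with model-generated arguments (empty if valid)."""
    errors: list[str] = []
    props = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            errors.append(f"missing required parameter '{name}'")
    for name, value in args.items():
        spec = props.get(name)
        if spec is None:
            errors.append(f"unexpected parameter '{name}'")
            continue
        expected = _TYPES.get(spec.get("type"))
        # bool is a subclass of int in Python, so only accept it for "boolean".
        wrong_bool = isinstance(value, bool) and spec.get("type") != "boolean"
        if expected is not None and (not isinstance(value, expected) or wrong_bool):
            errors.append(f"parameter '{name}' should be of type {spec['type']}")
        elif "enum" in spec and value not in spec["enum"]:
            errors.append(f"parameter '{name}' must be one of {spec['enum']}")
    return errors


schema = {
    "type": "object",
    "properties": {
        "city": {"type": "string"},
        "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
    },
    "required": ["city"],
}

print(validate_arguments(schema, {"city": "Paris"}))  # []
print(validate_arguments(schema, {"unit": "kelvin"}))
# ["missing required parameter 'city'", "parameter 'unit' must be one of ['celsius', 'fahrenheit']"]
```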

How Does Function Calling Work?

The process of function calling in LLMs involves several steps:

1. Input Analysis: The LLM analyzes the user's input to determine if external data or computation is required.

2. Function Matching: If a function is needed, the model compares the request against the function definitions it has been given (names, descriptions, and parameter schemas) and selects the most relevant one.

3. Parameter Extraction: The LLM extracts or infers the necessary parameters from the user's input and conversation context.

4. Function Call Formulation: The model constructs a proper function call with the selected function and extracted parameters.

5. Execution: The system hosting the LLM executes the function, typically through an API; the model itself only produces the call.

6. Result Processing: The function's output is passed back to the LLM, which interprets it in light of the user's request.

7. Response Generation: Finally, the model generates a response that incorporates the function's output and addresses the user's original query.

This process allows LLMs to seamlessly combine their language understanding capabilities with external tools and data sources, greatly expanding their utility.
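The seven steps above can be sketched as a loop in the application code. To keep the example runnable offline, the model is replaced by `fake_model`, a hard-coded stand-in; the message format (`role`, `tool_call`, and `tool` messages) and the `get_current_weather` tool are hypothetical and only loosely resemble real provider APIs.

```python
import json
from typing import Any, Callable


def get_current_weather(city: str, unit: str = "celsius") -> dict[str, Any]:
    """Hypothetical tool; returns canned data instead of calling a weather API."""
    return {"city": city, "temperature": 18, "unit": unit, "conditions": "cloudy"}


TOOLS: dict[str, Callable[..., Any]] = {"get_current_weather": get_current_weather}


def fake_model(messages: list[dict[str, Any]]) -> dict[str, Any]:
    """Stand-in for a real LLM call, hard-coded for this example.

    If the latest message is a tool result, it answers in text; otherwise it
    requests the weather tool for Paris.
    """
    last = messages[-1]
    if last["role"] == "tool":
        data = json.loads(last["content"])
        if "error" in data:
            return {"role": "assistant", "content": f"Sorry, that failed: {data['error']}"}
        return {
            "role": "assistant",
            "content": f"It is {data['temperature']} degrees {data['unit']} "
                       f"and {data['conditions']} in {data['city']}.",
        }
    return {
        "role": "assistant",
        "tool_call": {"name": "get_current_weather",
                      "arguments": json.dumps({"city": "Paris"})},
    }


def run_turn(user_text: str, max_steps: int = 5) -> str:
    # The growing message list is what preserves context across steps.
    messages: list[dict[str, Any]] = [{"role": "user", "content": user_text}]
    for _ in range(max_steps):
        reply = fake_model(messages)  # steps 1-4: analyze, match, extract, formulate
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]  # step 7: final answer to the user
        try:
            func = TOOLS.get(call["name"])
            if func is None:
                raise ValueError(f"unknown function: {call['name']}")
            result = func(**json.loads(call["arguments"]))  # step 5: the app executes it
        except Exception as exc:
            result = {"error": str(exc)}  # report failures back instead of crashing
        # Step 6: return the output to the model as a "tool" message.
        messages.append({"role": "tool", "name": call["name"], "content": json.dumps(result)})
    raise RuntimeError("model did not produce a final answer")


print(run_turn("What's the weather in Paris right now?"))
# prints: It is 18 degrees celsius and cloudy in Paris.
```

The `max_steps` limit is a design choice: it stops the loop if a model keeps requesting tools without ever producing a final answer.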

Practical Applications and Use Cases

Function calling makes AI assistants more capable in various real-world scenarios:

1. Real-time Information Retrieval:

- Weather updates: Getting current conditions for any location.

- Financial data: Checking the latest stock prices or exchange rates.

- News: Accessing recent headlines on specific topics.

2. Complex Calculations:

- Financial planning: Calculating loan payments or investment returns.

- Scientific computations: Solving equations in physics or chemistry.

- Engineering: Performing stress analysis or circuit calculations.

3. Database Interactions:

- Customer management: Looking up or updating client information.

- Inventory tracking: Checking stock levels or product details.

- Content management: Retrieving or modifying website content.

4. API Integrations:

- Online shopping: Checking product availability or processing orders.

- Travel booking: Searching for and reserving flights or hotels.

- Social media: Posting updates or checking account statistics.

5. Smart Home Control:

- Device management: Controlling lights, thermostats, or security systems.

- Automation: Setting up routines or monitoring energy usage.

6. Data Analysis and Visualization:

- Business reports: Creating charts or analyzing trends.

- Research: Processing and visualizing experimental data.

7. Language Services:

- Translation: Converting text between languages in real-time.

- Speech processing: Converting speech to text or text to speech.

8. Scheduling and Calendar Management:

- Appointment booking: Finding free time slots and scheduling meetings.

- Event planning: Creating events and sending invitations.

These applications show how function calling transforms AI from a simple chat tool into a powerful assistant that can perform complex tasks across various fields.
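On the application side, most of these use cases follow the same pattern: each capability is an ordinary function that is registered once, advertised to the model, and dispatched by name when the model requests it. The sketch below assumes two hypothetical tools, `calculate_loan_payment` and `check_stock`. The loan calculation uses the standard fixed-payment amortization formula M = P * r / (1 - (1 + r)^(-n)), where P is the principal, r the monthly interest rate, and n the number of monthly payments.

```python
from typing import Any, Callable

# Hypothetical registry: one place that both lists the tools offered to the
# model and dispatches the calls the model requests.
REGISTRY: dict[str, Callable[..., Any]] = {}


def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function under its own name."""
    REGISTRY[func.__name__] = func
    return func


@tool
def calculate_loan_payment(principal: float, annual_rate: float, months: int) -> float:
    """Fixed monthly payment on an amortized loan; annual_rate is a fraction, e.g. 0.06."""
    r = annual_rate / 12  # monthly interest rate
    if r == 0:
        return round(principal / months, 2)
    return round(principal * r / (1 - (1 + r) ** -months), 2)


@tool
def check_stock(sku: str) -> int:
    """Units in stock for a product (stubbed with an in-memory table)."""
    inventory = {"SKU-001": 42, "SKU-002": 0}
    return inventory.get(sku, 0)


def tool_descriptions() -> list[dict[str, str]]:
    """Name/description pairs the application could advertise to the model."""
    return [{"name": name, "description": func.__doc__ or ""} for name, func in REGISTRY.items()]


def dispatch(name: str, arguments: dict[str, Any]) -> Any:
    """Run the tool the model asked for, rejecting names that were never registered."""
    if name not in REGISTRY:
        raise ValueError(f"unknown tool: {name}")
    return REGISTRY[name](**arguments)


print(dispatch("calculate_loan_payment", {"principal": 10000, "annual_rate": 0.06, "months": 12}))  # 860.66
print(dispatch("check_stock", {"sku": "SKU-001"}))  # 42
```

Keeping the registry as the single source of truth means the tools advertised to the model and the functions the application is willing to run cannot drift apart.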

Managing Ambiguous Function Calls in LLMs

Sometimes, when people ask questions or give instructions to an LLM-based assistant, they are not completely clear. The assistant needs to handle these situations carefully. Here's how it can do that:

1. Asking for More Information: When the assistant isn't sure what you mean, it asks you to explain, for example with "Could you tell me more about that?", or offers a few options to pick from.

2. Using Clues from Earlier Conversation: The assistant keeps track of what you've been talking about and uses that context to make informed guesses about what you mean now.

3. Making Reasonable Guesses: If you leave out some details, the assistant fills in the blanks with sensible defaults. For example, if you ask about the weather but don't say where, it might assume you mean your current location.

4. Combining Different Tools: To answer a vague question, the assistant may need to use several of its tools together.

5. Ranking Possible Meanings: When your request could mean different things, the assistant ranks the possible interpretations and chooses the one most likely to be right.

6. Explaining Its Thinking: The assistant tells you how it understood your request and what assumptions it made, so you can tell whether it's on the right track.

7. Double-Checking Important Actions: If you ask the assistant to do something important or hard to undo, it asks you to confirm before going ahead.
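Several of these strategies (filling gaps from context, asking for missing details, stating assumptions, and confirming irreversible actions) are often enforced by the application around the model rather than left to the model alone. The sketch below is one hypothetical way to express such a policy: `decide`, the `REQUIRED` and `IRREVERSIBLE` tables, and the `user_city` context key are illustrative assumptions. It does not attempt the ranking or tool-combining strategies.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical policy tables: required parameters per tool, and tools whose
# effects are hard to undo.
REQUIRED: dict[str, list[str]] = {
    "get_current_weather": ["city"],
    "cancel_order": ["order_id"],
}
IRREVERSIBLE = {"cancel_order"}


@dataclass
class Decision:
    action: str  # "execute", "ask", or "confirm"
    arguments: dict[str, Any] = field(default_factory=dict)
    message: str = ""


def decide(name: str, args: dict[str, Any], context: dict[str, Any],
           confirmed: bool = False) -> Decision:
    args = dict(args)  # copy so the caller's dict is not modified
    # Reasonable guess: use the user's known city if the request omitted it.
    if name == "get_current_weather" and "city" not in args and "user_city" in context:
        args["city"] = context["user_city"]
    missing = [p for p in REQUIRED.get(name, []) if p not in args]
    if missing:
        # Ask for more information instead of inventing a value.
        return Decision("ask", args, f"Could you tell me the {', '.join(missing)}?")
    if name in IRREVERSIBLE and not confirmed:
        # Double-check before an action that cannot easily be undone.
        return Decision("confirm", args, f"About to run {name} with {args}. Proceed?")
    # State the interpretation so the user can spot a wrong assumption.
    return Decision("execute", args, f"Running {name} with {args}.")


print(decide("get_current_weather", {}, {"user_city": "Lyon"}))
print(decide("get_current_weather", {}, {}).message)  # Could you tell me the city?
```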

By handling unclear requests in these ways, the assistant can give accurate, useful responses and remain trustworthy even when your questions or instructions aren't perfectly clear. It's like having a helpful assistant who's good at figuring out what you mean, even when you're not quite sure how to explain it yourself.

Conclusion

Managing function calls, especially in ambiguous situations, requires careful contextual analysis, parameter matching, and error handling. With these strategies, LLMs can select and execute the appropriate functions and give more accurate and relevant responses.

Overall, function calling bridges the gap between natural language understanding and real-world task execution, positioning LLMs as indispensable tools for both developers and end-users in diverse domains.

FAQ

1. What is Function Calling in LLMs?

Function calling allows Large Language Models to interact with external tools and APIs, extending their capabilities beyond language processing to perform real-world tasks and access up-to-date information.

2. How does Function Calling improve AI assistants?

Function Calling enables AI assistants to perform complex tasks like retrieving real-time data, making calculations, and interacting with databases, transforming them from simple chatbots into powerful, versatile tools.

3. How do LLMs handle ambiguous function calls?

LLMs manage ambiguous calls by asking for clarification, using context clues, making reasonable assumptions, combining tools, ranking possibilities, explaining their reasoning, and confirming important actions.
