The emergence of powerful AI development tools has transformed how developers build AI applications. Choosing between LangChain and LlamaIndex is a key decision for developers who want to build and deploy applications powered by large language models (LLMs), whether the underlying models run through Transformers, vLLM, SGLang, or a hosted API.

While the two frameworks address distinct needs, their capabilities also overlap. This post explores their features, use cases, and nuances to help you choose the right tool for your project.

What is LangChain?

LangChain is a framework for developing applications powered by LLMs. Its modular design lets developers compose prompts, models, tools, and data sources into complex AI workflows, which makes it well suited to generative AI tasks, retrieval-augmented generation (RAG), and multi-step processes.

LangChain simplifies every stage of the LLM application lifecycle:

Development: Build your applications using LangChain's open-source building blocks, components, and third-party integrations. Use LangGraph to build stateful agents with first-class streaming and human-in-the-loop support.

Productionization: Use LangSmith to inspect, monitor and evaluate your chains, so that you can continuously optimize and deploy with confidence.

Deployment: Turn your LangGraph applications into production-ready APIs and Assistants with LangGraph Cloud.
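LangGraph models an agent as a graph of steps (nodes) that read and update a shared state. The framework-agnostic sketch below illustrates that idea, including a human-in-the-loop checkpoint. It is not LangGraph's API: every name in it (`plan`, `execute`, `NODES`, `EDGES`, `NEEDS_APPROVAL`, `run`) is hypothetical, and the nodes return canned strings where a real agent would call an LLM or a tool.

```python
from typing import Callable, Optional

# Hypothetical sketch of a stateful agent graph; this is NOT LangGraph's API.
State = dict
Node = Callable[[State], State]


def plan(state: State) -> State:
    # Draft an action for the task (a real agent would call an LLM here).
    return {**state, "action": f"send summary of {state['task']!r}"}


def execute(state: State) -> State:
    # Carry out the drafted action (a real agent would call a tool here).
    return {**state, "result": f"done: {state['action']}"}


NODES: dict[str, Node] = {"plan": plan, "execute": execute}
EDGES: dict[str, Optional[str]] = {"plan": "execute", "execute": None}  # None = end
NEEDS_APPROVAL = {"execute"}  # human-in-the-loop checkpoint before these nodes


def run(state: State, start: str, approve: Callable[[State], bool]) -> State:
    """Walk the graph from `start`, pausing if a checkpoint is not approved."""
    node: Optional[str] = start
    while node is not None:
        if node in NEEDS_APPROVAL and not approve(state):
            # Return the state as-is so a human can review it and resume later.
            return {**state, "status": f"paused before {node}"}
        state = NODES[node](state)
        node = EDGES[node]
    return {**state, "status": "finished"}
```

Calling `run({"task": "Q3 report"}, "plan", approve=lambda s: False)` stops before `execute` and returns the state with the drafted `action`, so a reviewer can inspect it and resume with `run(paused_state, "execute", approve=lambda s: True)`. Having each node return an updated copy of the state keeps every intermediate step easy to inspect or persist.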

What is LlamaIndex?

LlamaIndex is a framework for building context-augmented generative AI applications with LLMs, including agents and workflows. It focuses primarily on data indexing, retrieval, and efficient interaction with LLMs, simplifying the integration of LLMs with structured and unstructured data sources for querying and data augmentation.
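The core loop behind context augmentation is: index documents as vectors, retrieve the passages closest to a query, and place them in the prompt. The sketch below shows that loop in plain Python; it is not LlamaIndex's API. `ToyIndex`, `embed`, `cosine`, and `augmented_prompt` are hypothetical names, and the word-count `embed` function is a stand-in for a real embedding model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in 'embedding': word counts. A real system calls an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyIndex:
    """Hypothetical in-memory index of (passage, vector) pairs."""

    def __init__(self, passages: list[str]):
        self.entries = [(p, embed(p)) for p in passages]

    def retrieve(self, query: str, top_k: int = 1) -> list[str]:
        # Rank every stored passage by similarity to the query vector.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [passage for passage, _ in ranked[:top_k]]


def augmented_prompt(index: ToyIndex, question: str, top_k: int = 1) -> str:
    """Context augmentation: put the retrieved passages ahead of the question."""
    context = "\n".join(index.retrieve(question, top_k))
    return f"Context:\n{context}\n\nQuestion: {question}"


index = ToyIndex([
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
])
print(augmented_prompt(index, "How long do refunds take?"))
```

Here the refund passage is retrieved because it is the only one sharing a word ("refunds") with the question. The same index-then-retrieve structure holds when the toy pieces are replaced by a real embedding model and vector store.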

Advanced Use Cases and Strengths

When evaluating LangChain vs. LlamaIndex, understanding their advanced use cases and strengths is crucial:

Use Cases and Strengths of LangChain

1. Multi-Model Integration: Supports OpenAI, Hugging Face, and other APIs, making it versatile for applications requiring diverse LLM capabilities.

2. Chaining Workflows: Composes steps sequentially or in parallel and can carry memory across turns, which makes it a good fit for conversational agents and task automation.

3. Generative Tasks: Well suited to generation-focused applications such as text generation, summarization, translation, and even poetry or code writing.

4. Observability: LangSmith enables advanced debugging and real-time monitoring of AI workflows, helping teams catch and diagnose failures quickly.
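To illustrate what chaining means, here is a framework-agnostic Python sketch of sequential steps, parallel branches, and a simple memory. It is not LangChain's API: `sequential`, `parallel`, `Memory`, and `fake_llm` are hypothetical names, and `fake_llm` stands in for a call to a real model provider.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical sketch of chaining; this is NOT LangChain's API.
Step = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call (OpenAI, Hugging Face, etc.).
    return f"<answer to: {prompt}>"


def sequential(*steps: Step) -> Step:
    """Feed each step's output into the next step."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def parallel(**branches: Step) -> Callable[[str], dict[str, str]]:
    """Run independent branches on the same input concurrently."""
    def run(text: str) -> dict[str, str]:
        with ThreadPoolExecutor() as pool:
            futures = {name: pool.submit(fn, text) for name, fn in branches.items()}
            return {name: future.result() for name, future in futures.items()}
    return run


class Memory:
    """Remembers earlier user inputs and prepends them to new ones."""

    def __init__(self) -> None:
        self.turns: list[str] = []

    def with_history(self, text: str) -> str:
        history = " | ".join(self.turns)
        self.turns.append(text)
        return f"[history: {history}] {text}" if history else text


# Usage: a conversational chain and a parallel fan-out.
memory = Memory()
chat = sequential(memory.with_history, fake_llm)
chat("Hi")
print(chat("Bye"))  # <answer to: [history: Hi] Bye>
fan_out = parallel(
    summary=lambda t: fake_llm("Summarize: " + t),
    translation=lambda t: fake_llm("Translate to French: " + t),
)
print(fan_out("LangChain composes steps."))
```

Because every step is just a function from text to text, swapping `fake_llm` for a client of another provider leaves the chain unchanged, which is the property that makes multi-model integration practical. This `Memory` keeps only the user's earlier inputs; a production memory would usually store the model's replies as well.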

Use Cases and Strengths of LlamaIndex

1. Indexing and Search: Excels at organizing and retrieving large datasets, with the ability to handle domain-specific embeddings for improved accuracy.

2. Document Loading and Querying: Provides tools such as ‘SimpleDirectoryReader’, which loads documents in diverse formats from a directory, and ‘RetrieverQueryEngine’, which answers queries over the retrieved content.

3. Interactive Engines: Chat engines such as ‘ContextChatEngine’ retrieve relevant stored data for each message in a conversation, making LlamaIndex well suited to Q&A systems.

4. Integration with Vector Stores: Works with vector databases such as Pinecone and Milvus, so embeddings can be stored and searched at scale rather than only in memory.
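A context-aware chat engine combines two pieces: retrieval for every new message and a running conversation history. The sketch below shows that pattern in plain Python under simplifying assumptions; it is not LlamaIndex's `ContextChatEngine` API. `ToyContextChat` and `tokens` are hypothetical names, word overlap stands in for vector search (the job a store such as Pinecone or Milvus would do), and `llm` is any function that maps a prompt string to a reply.

```python
import re
from typing import Callable


def tokens(text: str) -> set[str]:
    """Lower-cased word set used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class ToyContextChat:
    """Hypothetical chat engine: retrieve per message, keep history, build prompt."""

    def __init__(self, passages: list[str], top_k: int = 1):
        self.passages = passages
        self.top_k = top_k
        self.history: list[tuple[str, str]] = []  # (role, message) pairs

    def retrieve(self, message: str) -> list[str]:
        # Rank passages by word overlap; a vector store would do this step for real.
        query = tokens(message)
        ranked = sorted(self.passages, key=lambda p: len(query & tokens(p)), reverse=True)
        return ranked[: self.top_k]

    def build_prompt(self, message: str) -> str:
        context = "\n".join(self.retrieve(message))
        history = "\n".join(f"{role}: {text}" for role, text in self.history)
        return f"Context:\n{context}\n\nHistory:\n{history}\n\nuser: {message}"

    def chat(self, message: str, llm: Callable[[str], str]) -> str:
        reply = llm(self.build_prompt(message))
        # Record both sides of the turn so later prompts include them.
        self.history += [("user", message), ("assistant", reply)]
        return reply
```

Each call to `chat` rebuilds the prompt from fresh retrieval results, so a follow-up question on a different topic pulls in different passages while the earlier turns stay in the history.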

Decision Factors To Consider

1. For Workflow Complexity: If your application involves multi-step logic, advanced chaining, and memory management, LangChain is the better choice.

2. For Search and Retrieval: If your goal is to build an application focusing on document indexing and efficient querying, LlamaIndex excels.

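3. Budget and Cost: LangChain can be more cost-efficient for embedding large datasets, while LlamaIndex is optimized for recurring queries. In either case, most of the spend comes from embedding and LLM calls rather than from the frameworks themselves, which are open source, so estimate those calls for your workload.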

4. Lifecycle Management: LangChain provides finer-grained control over lifecycle processes such as debugging and monitoring, largely through LangSmith.
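For the budget factor, a back-of-the-envelope estimate separates the one-time cost of embedding a corpus from the recurring cost of answering queries. The sketch below does that arithmetic; the per-token prices and the function names `embedding_cost` and `monthly_query_cost` are hypothetical placeholders, not any provider's real rates or any library's API, so substitute your own figures.

```python
# HYPOTHETICAL prices in USD per 1M tokens; replace with your provider's rates.
EMBED_PRICE_PER_1M = 0.10
LLM_PRICE_PER_1M = 1.00


def embedding_cost(corpus_tokens: int) -> float:
    """One-time cost to embed the whole corpus into an index."""
    return corpus_tokens / 1_000_000 * EMBED_PRICE_PER_1M


def monthly_query_cost(queries: int, tokens_per_query: int) -> float:
    """Recurring cost: each query sends the prompt plus retrieved context to the LLM."""
    return queries * tokens_per_query / 1_000_000 * LLM_PRICE_PER_1M


# Example: a 50M-token corpus and 100k queries per month at ~2k tokens each.
print(f"embedding (once):  ${embedding_cost(50_000_000):.2f}")
print(f"queries (monthly): ${monthly_query_cost(100_000, 2_000):.2f}")
```

With these placeholder numbers, one month of queries costs 40 times the one-time embedding run, so in a high-traffic scenario like this one, per-query efficiency matters more than indexing cost.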

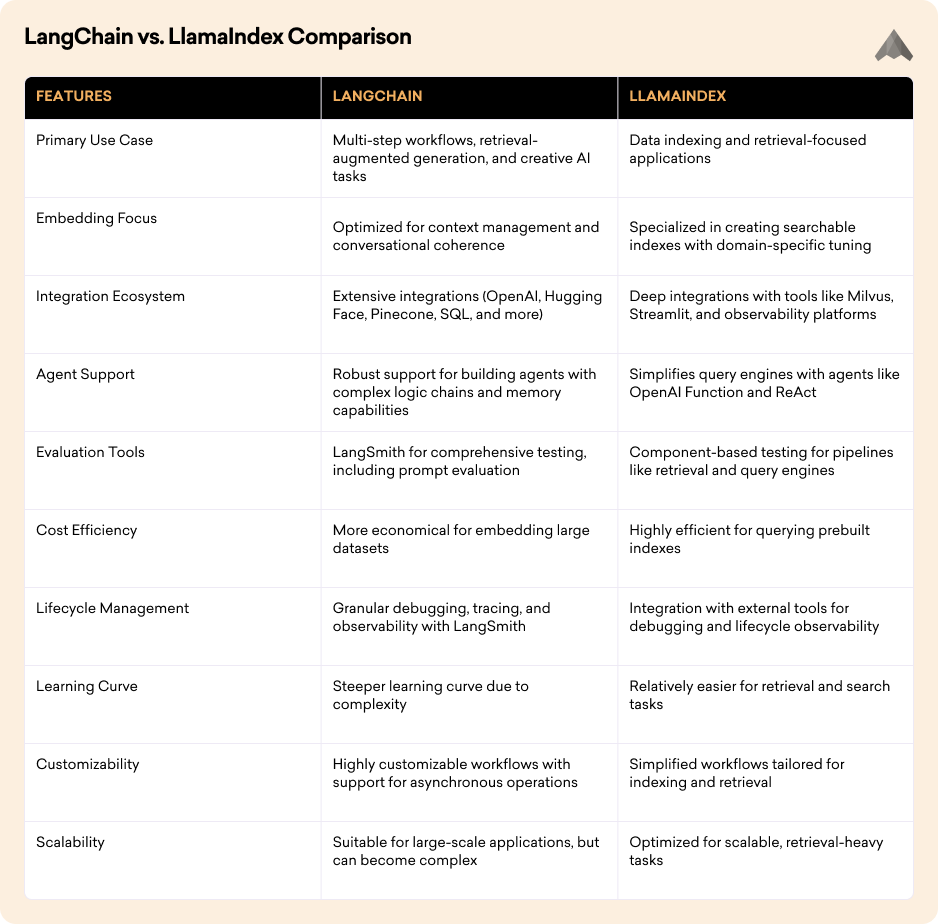

LangChain vs. LlamaIndex Comparison

To make an informed choice in the LangChain vs. LlamaIndex debate, let's examine their key features side by side:

Can LlamaIndex and LangChain Work Together?

Yes. Many developers use both frameworks together. For example, LlamaIndex can handle indexing and retrieval while LangChain manages downstream generative tasks or logic chains, a hybrid approach that draws on the strengths of each tool for complex AI applications.
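This division of labor can be sketched as two stages joined by a narrow interface: a retrieval stage that returns passages and a generation stage that turns them into an answer. In a real project the first stage might be backed by a LlamaIndex index and the second by a LangChain chain; here both are plain-Python stand-ins, and `Retriever`, `KeywordRetriever`, and `hybrid_pipeline` are hypothetical names, not either library's API.

```python
import re
from typing import Callable, Protocol


class Retriever(Protocol):
    """Anything with a retrieve() method can serve as the retrieval stage."""

    def retrieve(self, query: str) -> list[str]: ...


class KeywordRetriever:
    """Stand-in for an index-backed retriever: keeps passages sharing a word with the query."""

    def __init__(self, passages: list[str]):
        self.passages = passages

    def retrieve(self, query: str) -> list[str]:
        words = set(re.findall(r"\w+", query.lower()))
        return [p for p in self.passages if words & set(re.findall(r"\w+", p.lower()))]


def hybrid_pipeline(retriever: Retriever, llm: Callable[[str], str]) -> Callable[[str], str]:
    """Retrieval stage feeds the generation stage through a plain list of strings."""

    def answer(question: str) -> str:
        context = retriever.retrieve(question)
        prompt = "Answer using only this context:\n" + "\n".join(context)
        return llm(f"{prompt}\nQuestion: {question}")

    return answer
```

Because the stages share only a list of strings, either side can be replaced (for example, swapping the keyword stand-in for a vector-index retriever) without touching the other.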

Our Final Words

LangChain and LlamaIndex serve distinct purposes but can complement each other in AI development. Your choice depends on your project's specific requirements, budget, and scalability goals, and for a production-ready application, combining their capabilities may yield the best results.

Explore more about LangChain and LlamaIndex to get started with your next AI project.
